AI is revolutionizing industries, shaping conversations, and redefining how we process information. But for all its capabilities, AI isn’t perfect. One of its most fascinating (and sometimes frustrating) quirks is AI hallucination: when a model confidently generates false, misleading, or entirely made-up information. Sounds bizarre? Let’s dive into how this happens, why it matters, and what to watch out for.

What Causes AI Hallucinations?

Unlike humans, AI doesn’t “know” facts—it predicts likely responses based on patterns in its training data. Because of this, hallucinations occur when AI:

✅ Generates plausible but false information based on incomplete or flawed data.

✅ Misinterprets prompts and fills gaps with fabricated details.

✅ Has no way to verify its claims in real time (unless it is connected to search tools).

✅ Reflects biases and inconsistencies from outdated sources.

Simply put, AI does not think—it guesses based on probabilities. And sometimes, its guesses are spectacularly wrong.
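To make the "guessing based on probabilities" idea concrete, here is a minimal sketch in Python. It is a toy, not how any real model is implemented: the prompt, the three candidate answers, and their probabilities are all invented for illustration, and the function names are hypothetical. The point is only that when a plausible but wrong answer carries real probability, weighted random sampling will sometimes pick it.

```python
import random

# Hypothetical next-word probabilities for the prompt
# "The capital of Australia is". These numbers are invented for
# illustration and do not come from any real model.
NEXT_TOKEN_PROBS = {
    "Canberra": 0.55,   # correct
    "Sydney": 0.35,     # plausible but wrong
    "Melbourne": 0.10,  # plausible but wrong
}


def sample_next_token(probs, rng):
    """Pick one candidate at random, weighted by its probability."""
    tokens = list(probs)
    weights = [probs[token] for token in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


def wrong_answer_rate(probs, correct, trials, seed=0):
    """Sample repeatedly and return the fraction of wrong answers."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    wrong = sum(sample_next_token(probs, rng) != correct for _ in range(trials))
    return wrong / trials


if __name__ == "__main__":
    rate = wrong_answer_rate(NEXT_TOKEN_PROBS, "Canberra", trials=10_000)
    print(f"Wrong answers: {rate:.0%}")
```

With these made-up numbers, the wrong answers together hold 45% of the probability, so the toy "model" gets the question wrong in roughly that share of runs, and it sounds equally sure of itself every time.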

Here Are Five Notable AI Hallucinations That Have Been Recorded and Discussed:

1️⃣ Fake Legal Citations in Court Cases

In 2023, a lawyer in the case Mata v. Avianca used ChatGPT to help draft a legal brief, trusting it to provide accurate case law references. Unfortunately, the AI fabricated several court cases, complete with fictional rulings and citations. After the nonexistent references were filed with the court, neither opposing counsel nor the judge could locate them, and the attorneys involved were sanctioned. AI might sound confident, but confidence does not equal correctness.

2️⃣ AI-Generated False Scientific Studies

A chatbot was asked to summarize a groundbreaking scientific study, but instead of pulling real data, it completely invented details about experiments that never happened. The misinformation circulated briefly before researchers stepped in to debunk it. This example highlights why AI can’t be blindly trusted in professional or academic fields.

3️⃣ Imaginary Historical Events

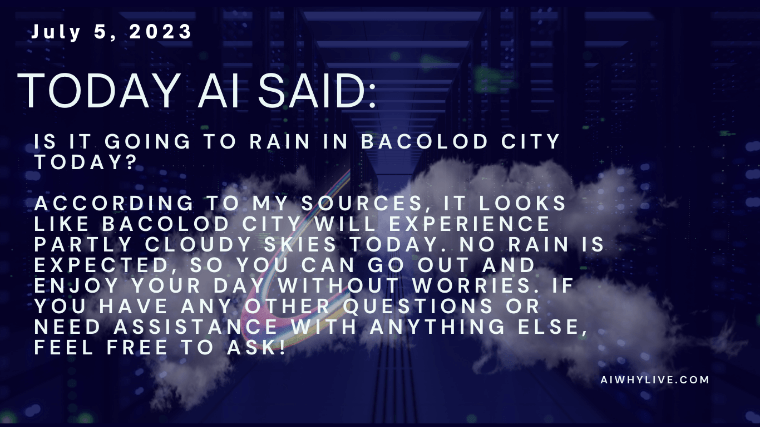

AI has occasionally made up treaties, wars, and diplomatic decisions that never existed. For example, some models have stated that a fictional treaty was signed between two countries in the 1800s, leading users to question historical accuracy. This is a prime example of AI hallucination warping factual narratives.

4️⃣ AI Image Generator Mistakes

Visual AI tools can produce illogical or downright bizarre results. Prompted for a beach scene, for example, a generator might draw a cat with multiple tails sitting on water instead of sand. Image models struggle to keep details consistent with the context of a prompt, which makes for amusing (and sometimes unsettling) creations.

5️⃣ False Financial Data Reports

Some AI-generated finance summaries have included incorrect stock prices, company earnings, and revenue figures, misleading users who rely on AI for investment decisions. Always cross-check AI-generated financial data against trusted sources before acting on it.

How to Detect & Prevent AI Hallucinations

🔹 Always cross-check AI-generated information with trusted sources like reputable websites, books, or expert opinions.

🔹 Verify citations and references—fake research papers or legal cases are a common pitfall.

🔹 Be cautious when AI is overly confident in obscure claims.

🔹 Use AI systems connected to search tools for real-time fact-checking.

🔹 Ask AI for clarification—phrasing the same question differently may reveal inconsistencies.
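Two of the checks above are easy to sketch in code: verifying citations against a trusted list, and comparing the answers you get when you rephrase a question. The Python below is a rough illustration under stated assumptions, not a real fact-checking tool. The function names are hypothetical, the "trusted index" is just a list you supply yourself, and answers are compared by simple text matching, which only works for short factual replies.

```python
import re


def normalize(text):
    """Lowercase, drop punctuation, and collapse spaces so that
    trivial formatting differences are ignored."""
    cleaned = re.sub(r"[^a-z0-9 ]+", "", text.lower())
    return " ".join(cleaned.split())


def unverified_citations(cited, trusted_index):
    """Return the citations that do not appear in the trusted index."""
    known = {normalize(entry) for entry in trusted_index}
    return [c for c in cited if normalize(c) not in known]


def answers_disagree(answers):
    """True if rephrasing one question produced different answers."""
    return len({normalize(a) for a in answers}) > 1


if __name__ == "__main__":
    # Both case names and the index are made up for this example.
    trusted = ["Real v. Case"]
    cited_by_ai = ["Real v. Case", "Smith v. Example Corp."]
    print("Check by hand:", unverified_citations(cited_by_ai, trusted))

    # Answers collected by asking the same question three different ways.
    print("Inconsistent:", answers_disagree(["Canberra", "canberra.", "Sydney"]))
```

A citation missing from your list is not proof of a hallucination, and matching answers are not proof of truth. Treat both results as a prompt to look more closely, not as a verdict.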

Despite AI Hallucinations, Can We Trust AI?

AI hallucinations serve as a powerful reminder that while artificial intelligence is an incredible tool, it’s not infallible. Whether you’re using AI for research, business decisions, or content creation, fact-checking remains crucial to ensure accuracy and reliability. AI can enhance efficiency, but at the end of the day, human judgment is irreplaceable in filtering truth from illusion. 🚀

Curious to learn more about AI’s quirks and capabilities? Join the conversation at AIWhyLive.com—where innovation meets critical thinking, and every advancement is explored with a human touch.